LEAD-ME Summer Training School Warsaw 2021 Programme

Eye tracking in media accessibility research - methods, technologies and data analyses

5-9 July 2021 - Online

- Day 1: Monday 5th July

- Day 2: Tuesday 6th July

- Day 3: Wednesday 7th July

- Day 4: Thursday 8th July

- Day 5: Friday 9th July

- Tutors for Students' Projects

- Speaker Bios

- Abstracts

Monday 5th July

| Time (CET) | Session |
|---|---|
| 09:00 - 10:00 | Keynote - Päivi Majaranta, Vision for Augmented Humans |
| 10:00 - 10:15 | Coffee Break |
| 10:15 - 12:30 | Lecture - Izabela Krejtz, Experimental Designs in Eye Tracking Studies |
| 12:30 - 13:30 | Lunch Break |
| 13:30 - 15:45 | Workshop - Adam Cellary, Webcam-based Eye Tracking |
| 15:45 - 16:00 | Coffee Break |
| 16:00 - 18:00 | Hands-on Session - Ideas Creation, Pitch Session, Research Questions & Hypotheses |

Tuesday 6th July

| Time (CET) | Session |
|---|---|
| 09:00 - 10:00 | Lecture - Miroslav Vujičić and Uglješa Stankov, Tourism Accessibility 4.0 - A Transition of e-Accessibility in Tourism Towards a More Inclusive Future |
| 10:00 - 10:15 | Coffee Break |
| 10:15 - 12:30 | Workshop - Krzysztof Krejtz, Statistical Analysis of Eye Tracking Data |
| 12:30 - 13:30 | Lunch Break |
| 13:30 - 15:45 | Workshop - Andrew T. Duchowski, Eye Tracking Data Analytic Pipeline |
| 15:45 - 16:00 | Coffee Break |
| 16:00 - 18:00 | Hands-on Session - Method Design, Procedure Design, Stimuli Collection |

Wednesday 7th July

| Time (CET) | Session |
|---|---|
| 09:00 - 10:00 | Keynote - Jan-Louis Kruger, Audiovisual Translation as Multimodal Mediation |
| 10:00 - 10:15 | Coffee Break |
| 10:15 - 12:30 | Lecture - Breno Silva & Agnieszka Szarkowska, Using Linear Mixed Models to Analyse Subtitle Reading |
| 12:30 - 13:30 | Lunch Break |
| 13:30 - 15:45 | Hands-on Session - Experimental Procedure Implementation |
| 15:45 - 16:00 | Coffee Break |
| 16:00 - 18:00 | Workshop - Craig Hennessey, Introduction to Eye-Tracking Equipment: Setup, Recording, and Analysis |

Thursday 8th July

| Time (CET) | Session |
|---|---|
| 09:00 - 10:00 | Keynote - Pilar Orero, Future of Media Accessibility and Research |
| 10:00 - 10:15 | Coffee Break |
| 10:15 - 12:30 | Lecture - Anna Matamala, Qualitative Research Methods in Media Accessibility: Focus Groups and Interviews |
| 12:30 - 13:30 | Lunch Break |
| 13:30 - 14:45 | Workshop - Karolina Broś, Literature Review - Reading and Writing Eye-Tracking Research Papers |
| 14:45 - 15:45 | Lecture - Anna Jankowska, Using Translation Process Methods in Audiovisual Translation and Media Accessibility Research |
| 15:45 - 16:00 | Coffee Break |
| 16:00 - 18:00 | Hands-on Session - Data Collection & Analysis |

Friday 9th July

| Time (CET) | Session |
|---|---|
| 09:00 - 11:15 | Hands-on Session - Students' Projects Presentations |
| 11:15 - 11:30 | Coffee Break |
| 11:30 - 12:30 | Lecture - Chris Hughes, Eye Tracking Research in Virtual Reality |
| 12:30 - 13:45 | Farewell & Winter School Announcement - Pilar Orero, Agnieszka Szarkowska, Krzysztof Krejtz, Juanpe Rica |

Tutors for Students' Projects

- Agnieszka Szarkowska (University of Warsaw, Poland)

- Izabela Krejtz (SWPS University of Social Sciences and Humanities, Poland)

- Andrew T. Duchowski (Clemson University, SC, USA)

- Alina Secara (University of Vienna, Austria)

- Breno Silva (University of Warsaw, Poland)

- Karolina Broś (University of Warsaw, Poland)

- Katarzyna Wisiecka (SWPS University of Social Sciences and Humanities, Poland)

- Beata Lewandowska (RealEye, Poland)

- Krzysztof Krejtz (SWPS University of Social Sciences and Humanities, Poland)

Speaker Bios

Päivi Majaranta (Tampere University, Finland)

Päivi Majaranta is a Senior Research Fellow at Tampere University, Finland, where she teaches courses on human-technology interaction. Her research interests include gaze interaction, multimodal interfaces, user experience and animal-computer interaction. She holds an MSc in Computer Science (1998) and a PhD in Interactive Technology (2009) from the University of Tampere. She is the former president and a current member of the management board of the COGAIN Association, a member of the ACM ETRA Steering Committee, and a member of the editorial board of the Journal of Eye Movement Research. She has over 60 peer-reviewed publications in the field of human-computer interaction.

Jan-Louis Kruger (Macquarie University, Australia)

Jan-Louis Kruger is a professor and Head of the Department of Linguistics at Macquarie University. He began his research career in English literature, with a particular interest in the way Modernist poets and novelists manipulate language and in the construction of narrative point of view. From there he began exploring the creation of narrative in film and how audiovisual translation (subtitling and audio description) facilitates the immersion of audiences in the fictional reality of film.

In the past decade his attention has shifted to the multimodal integration of language in video where auditory and visual sources of information supplement and compete with text in the processing of subtitles. His research uses eye tracking experiments (combined with psychometric instruments and performance measures) to investigate the cognitive processing of language in multimodal contexts. His current work looks at the impact of redundant and competing sources of information on the reading of subtitles at different presentation rates and in the presence of different languages.

Pilar Orero (Universidad Autónoma de Barcelona, Spain)

Pilar Orero is the INDRA-ADDECCO Chair in Accessible Technology and full professor of Audiovisual Translation at the Universitat Autònoma de Barcelona (Spain), where she leads the TransMedia Catalonia Research Group. She acts as Gian Maria Greco's supervisor in the MSCA H2020 project "Understanding Media Accessibility Quality" (UMAQ).

She is a world-leading scholar in media accessibility with vast experience in standardisation and policy-making. She is a scientific/organizing committee member of many conferences, including Media4All, ARSAD, and Video Games for All. She has delivered, upon invitation, more than 15 plenary lectures and 30 guest lectures all over the world, including at the 9th United Nations Conference of the States Parties to the Convention on the Rights of Persons with Disabilities (New York, 2016).

She has coordinated or participated in more than 40 national and international research projects, more than 20 of them on media accessibility. She has published over 70 papers and 10 books. Her works are among the most widely cited publications in the field of media accessibility, of which she is one of the founding scholars.

Izabela Krejtz (SWPS University of Social Sciences and Humanities, Poland)

Izabela Krejtz is an associate professor of psychology at SWPS University of Social Sciences and Humanities, Warsaw, Poland. She is a recognized researcher in the fields of cognitive psychopathology, educational psychology, and daily experience measured with ecological momentary assessment. She is the author of several dozen international scientific publications, many of which are based on eye tracking experiments. She regularly teaches experimental research methodology and applications of the eye tracking method in media research.

Adam Cellary (RealEye, Poland)

Adam Cellary graduated in Robotics from the Faculty of Mechatronics at Warsaw University of Technology. He has been interested in eye tracking from a technical point of view since 2012 and is an entrepreneur and developer at heart.

Andrew T. Duchowski (Clemson University, SC, USA)

Andrew T. Duchowski is a professor of Computer Science at Clemson University. He received his baccalaureate (1990) from Simon Fraser University, Burnaby, Canada, and doctorate (1997) from Texas A&M University, College Station, TX, both in Computer Science. His research and teaching interests include visual attention and perception, eye tracking, computer vision, and computer graphics. He is a noted research leader in the field of eye tracking, having produced a corpus of papers and a monograph related to eye tracking research, and has delivered courses and seminars on the subject at international conferences. He maintains Clemson's eye tracking laboratory, and teaches a regular course on eye tracking methodology attracting students from a variety of disciplines across campus.

Krzysztof Krejtz (SWPS University of Social Sciences and Humanities, Poland)

Krzysztof Krejtz, Ph.D., is a psychologist at SWPS University of Social Sciences and Humanities in Warsaw, Poland, where he leads the Eye Tracking Research Center. In 2017 he was a guest professor at Ulm University in Ulm, Germany. He has given invited talks at, e.g., the Max Planck Institute (Germany), the University of Bergen (Norway) and the University of Nebraska-Lincoln (USA). He has extensive experience in research methods and statistics in social and cognitive psychology. His research focuses on the use of the eye tracking method and on developing novel metrics that capture the dynamics of attention and information processing (transition matrix entropy, the ambient-focal coefficient K), as well as on the dynamics of attention in the context of Human-Computer Interaction, multimedia learning, media user experience and accessibility. He is a member of the ACM Symposium on Eye Tracking Research and Applications (ACM ETRA) Steering Committee and was general chair of ACM ETRA 2018 and 2019.

Breno Silva (University of Warsaw, Poland)

Breno Silva holds two MAs in Education (Universities of Warsaw and Nottingham) and a PhD in Applied Linguistics (University of Warsaw). He is a teacher and researcher. As a teacher, Breno runs workshops on the basics of statistics and on research methodology in applied linguistics. As a researcher, his main interests include lexical learning through reading and writing. Breno is currently involved in applied linguistics and psycholinguistics research, both concerning the learning of second- and third-language vocabulary.

Agnieszka Szarkowska (University of Warsaw, Poland)

Agnieszka Szarkowska is University Professor in the Institute of Applied Linguistics, University of Warsaw, and Head of the AVT Lab research group. She is a researcher, academic teacher, ex-translator, and translator trainer. Her research projects include eye tracking studies on subtitling, audio description, multilingualism in subtitling for the deaf and the hard of hearing, and respeaking. She now leads an international research team working on the project “Watching Viewers Watch Subtitled Videos”. Agnieszka is also the Vice-President of the European Association for Studies in Screen Translation (ESIST), a member of the European Society for Translation Studies (EST) and an honorary member of the Polish Audiovisual Translators Association (STAW).

Miroslav Vujičić (University of Novi Sad, Serbia)

Miroslav Vujičić is an associate professor at the University of Novi Sad, Faculty of Sciences. His main fields of interest are decision-making processes, project management, product development and cultural tourism, and he is proficient in data gathering, analysis and interpretation using mathematical and statistical methods. He has published 26 research papers in Scopus-indexed journals and has more than 274 citations in the Scopus database. He is Programme Leader of the BA (Hons) in Tourism, RVP for Eastern Europe in the ITSA network, and department coordinator for international affairs and student and staff mobility. He is the main evaluator for the impact assessment of the European Capital of Culture Novi Sad 2022. He is an experienced project manager and researcher with several international projects (EXtremeCLimTwin, CULTURWB, DiCultYouth, euCULTher, WATERTOUR).

Uglješa Stankov (University of Novi Sad, Serbia)

Uglješa Stankov is an Associate Professor at the Department of Geography, Tourism and Hotel Management, Faculty of Sciences, University of Novi Sad. His main research areas are the strategic role of information technology in tourism experiences and location intelligence in business activities. Given the growing negative impact that inadequate use of information technology has on the digital well-being of consumers, his recent research focuses on the "calm" design of interactive systems in tourism and hospitality, as well as on technology-assisted mindfulness (e-mindfulness). Uglješa actively cooperates with researchers and professional organizations in Serbia and abroad and currently participates in several international projects. He has published more than 150 scientific papers and four books.

Anna Matamala (Universidad Autónoma de Barcelona, Spain)

Anna Matamala, BA in Translation (UAB) and PhD in Applied Linguistics (UPF), is an associate professor at Universitat Autònoma de Barcelona. Currently leading TransMedia Catalonia, she has participated in and led projects on audiovisual translation and media accessibility. She has taken an active role in the organisation of scientific events (M4ALL, ARSAD) and has published in journals such as Meta, The Translator, Perspectives, Babel and Translation Studies. She is currently involved in standardisation work.

Anna Jankowska (University of Antwerp, Belgium)

Anna Jankowska, PhD, is a Professor at the Department of Translators and Interpreters of the University of Antwerp and a former Assistant Lecturer in the Chair for Translation Studies and Intercultural Communication at the Jagiellonian University in Krakow (Poland). She was a visiting scholar at the Universitat Autònoma de Barcelona within the Mobility Plus program of the Polish Ministry of Science and Higher Education (2016-2019). Her recent research projects include studies on the audio description process, mobile accessibility and software.

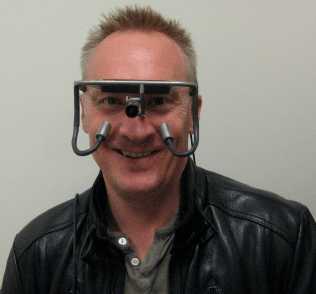

Christopher Hughes (Salford University, UK)

Dr Chris Hughes is a Lecturer in the School of Computer Science at Salford University, UK. His research focuses on developing computer science solutions to promote inclusivity and diversity throughout the broadcast industry. This work aims to ensure that broadcast experiences are inclusive across different languages and address the needs of people with hearing loss or low vision, people with learning difficulties, and older people. He was a partner in the H2020 Immersive Accessibility (ImAc) Project.

Karolina Broś (University of Warsaw, Poland)

Karolina Broś, PhD, is an assistant professor at the Institute of Applied Linguistics, University of Warsaw. She specialises in theoretical and experimental approaches to language research. Her recent projects include behavioural perception studies and EEG experiments focused on language processing in Spanish and other languages. She is currently running a project funded by the National Science Center on the acoustic, auditory and gestural correlates of consonantal contrasts in Spanish. She has published in several leading linguistics journals, such as the Journal of Linguistics, Phonetica, the Journal of Neurolinguistics and Phonology. At the Institute of Applied Linguistics, she is actively engaged as an M.A. supervisor and tutor and gives seminars on experimental approaches to linguistic research, translation and interpreting, as well as on bilingualism and the brain.

Craig Hennessey (University of British Columbia, British Columbia Institute of Technology, and Gazepoint)

Dr. Craig Hennessey, PhD, P.Eng., completed his doctorate at the University of British Columbia in 2008. His Master’s thesis involved the creation of a single-camera eye tracker, while his doctoral work extended eye tracking from 2D screens to 3D volumetric displays. After graduating, he co-founded the eye-tracking company Gazepoint, where he continues to develop innovative solutions in the field of eye tracking.

Abstracts

Vision for Augmented Humans

Eyeglasses once revolutionized human vision by offering an easy, non-invasive way to compensate for deficiencies in vision. Similarly, multimodal wearable technology may act as a facilitator to non-invasively augment human senses, action, and cognition - seamlessly, as if the enhancements were part of our natural abilities. In many scenarios, gaze plays a crucial role due to its unique capability to convey the focus of interest. Gaze has already been used in assistive technologies to compensate for impaired abilities. There are lessons learned that can be applied in human augmentation. In this talk, I will summarize some key lessons learned from the research conducted over the past decades. I will also discuss the role of gaze in multimodal interfaces and examine how different types of eye movements, together with other modalities, such as haptics, can support this intention. I will end with a call for research to realize the vision for an augmented human.

Audiovisual Translation as multimodal mediation

The world of audiovisual media has changed on a scale last seen with the shift from print to digital photography. Video on demand (VOD) has moved from an expensive concept limited by technology and bandwidth to the norm not only in most of the developed world but also, as an accelerated equaliser, in developing countries. This has increased the reach and potential of audiovisual translation to facilitate intercultural communication, although automated means of creating subtitles pose some threats.

While the skills required to create AVT have come within reach of a large group of practitioners due to advances in editing software and technology, with many processes from transcription to cuing being automated, research on the reception and processing of multimodal texts has also developed rapidly. This has given us new insights into the way viewers, for example, process the text of subtitles while also attending to auditory input and the rich visual code of film. Although acknowledged as one of the unique qualities of translation in this context, this multimodality of film is often neglected in technological advances. When the emphasis is on the cheapest and simplest way of transferring spoken dialogue to written text, or visual scenes to auditory descriptions, the complex interplay between language and other signs is overlooked.

Eye tracking provides a powerful tool for investigating the cognitive processing of viewers watching subtitled film, with research in this area drawing on cognitive science, psycholinguistics and psychology. I will present a brief description of eye tracking in AVT as well as the findings of some recent studies on subtitle reading at different presentation rates and in the presence of secondary visual tasks.

The Future of Media Accessibility and its Research: Leaving No One Behind

At the risk of sounding like "Mystic Meg", the presentation will look at some facts that will shape research topics in Media Accessibility. First come the EU legislative and political frameworks: the transposition across Europe of the Audiovisual Media Services Directive (AVMSD) and the European Accessibility Act (EAA). Next is the need for objective, research-based data to support the drafting of national standards for accessibility services. The new legislation requires both quantity and quality of access services, so national standards for benchmarking, regulating and reporting will be a matter of urgency. Media accessibility will be fully mainstreamed, like any other industrial service driven by legislation, as Health and Safety is. The growing number of accessibility services will offer plenty of scope for training and research. Intelligent technology will allow both the personalisation of services and the development of the Common User Profile, without losing sight of data disaggregation and fairness issues. Moving from the clinical disability model of user classification to a capabilities framework should lead to some results being revised and new studies being started. Immersive media formats should offer enough research questions for all, across all methodological approaches. The Internet of Things will also have an impact on the production, distribution and reception of media access services: new working practices, the application of blockchain to access service copyright, and the explosion of sound as a media object will keep us busy in the coming years. Media accessibility services will also expand beyond broadcast and movies into other industrial sectors, such as Education, Tourism and Risk Reduction Management. Finally, all research and training should follow the UN Sustainable Development Cooperation Framework, whose first principle is to work within a Human Rights context and whose second is "Leaving No One Behind".

Experimental Designs in Eye Tracking Studies

The lecture will recap the most important issues in the methodology of experimental eye tracking studies, including within-subjects, between-subjects and mixed designs, and hypothesis testing. It will also introduce key concepts of eye tracking methodology: a short review of the available types of eye trackers and their pros and cons for different uses, and the key information that can be obtained by tracking eye movements. I will also introduce the metrics most commonly used in eye tracking research.

Webcam-based Eye Tracking Method

The workshop will cover the most important aspects of webcam eye tracking methodology.

- What is webcam eye tracking?

- What are the key differences when using glasses, screen-mounted or webcam as input?

- How do you gather ET data, and what should you expect when hiring a panel?

- What results can you expect from ET sessions (gaze-based data vs. fixation-based data)?

- Diving into results (heatmaps, recordings, fixation plots)

The workshop will end with a Q&A session.

Eye Tracking Data Analytic Pipeline

This tutorial gives a short introduction to experimental design in general and to eye tracking studies in particular. The design of three different eye tracking studies (using stationary as well as mobile eye trackers) will also be presented, and the strengths and limitations of their designs will be discussed. The tutorial then presents the details of a Python-based gaze analytics pipeline developed and used by Drs. Duchowski and Gehrer. The pipeline consists of Python scripts for extraction of raw eye movement data, analysis and event detection via velocity-based filtering, and collation of events for statistical evaluation, followed by analysis and visualization of results using R. Attendees will have the opportunity to run the scripts on gaze data collected while participants viewed faces and categorized their emotional expressions. The tutorial covers basic eye movement analytics, e.g., fixation count and dwell time within AOIs, as well as advanced analysis using gaze transition entropy. Newer analytical tools and techniques, such as microsaccade detection and the Index of Pupillary Activity, will be covered, time permitting.
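The velocity-based event detection and gaze transition entropy mentioned above can be illustrated with a minimal, hypothetical sketch. It is not the Duchowski and Gehrer pipeline: the function names, the 30 deg/s velocity threshold, the 100 ms minimum fixation duration and the input format (timestamps in seconds, gaze in degrees of visual angle) are assumptions chosen for illustration. For entropy, the sketch approximates the stationary distribution by the share of transitions leaving each AOI and counts self-transitions; both are simplifying design choices.

```python
import math
from collections import Counter, defaultdict


def detect_fixations(samples, velocity_threshold=30.0, min_duration=0.1):
    """Group gaze samples into fixations with a simple velocity threshold (I-VT).

    samples: list of (t, x, y) tuples, t in seconds (strictly increasing),
    x and y in degrees of visual angle (hypothetical input format).
    Returns a list of (start_t, end_t, mean_x, mean_y) tuples.
    """
    fixations = []

    def close(run):
        # Keep a run of slow samples only if it lasts at least min_duration.
        if run and run[-1][0] - run[0][0] >= min_duration:
            xs = [s[1] for s in run]
            ys = [s[2] for s in run]
            fixations.append((run[0][0], run[-1][0], sum(xs) / len(xs), sum(ys) / len(ys)))

    current = []
    for prev, cur in zip(samples, samples[1:]):
        # Point-to-point angular velocity in degrees per second.
        velocity = math.hypot(cur[1] - prev[1], cur[2] - prev[2]) / (cur[0] - prev[0])
        if velocity < velocity_threshold:
            if not current:
                current.append(prev)
            current.append(cur)
        else:
            # A fast sample pair is treated as saccadic and ends the current fixation.
            close(current)
            current = []
    close(current)
    return fixations


def transition_entropy(aoi_sequence):
    """Gaze transition entropy in bits for a sequence of fixated AOI labels.

    Simplification: the stationary distribution is approximated by the share
    of transitions leaving each AOI, and self-transitions are counted.
    """
    counts = defaultdict(Counter)
    for src, dst in zip(aoi_sequence, aoi_sequence[1:]):
        counts[src][dst] += 1
    total = sum(sum(row.values()) for row in counts.values())
    entropy = 0.0
    for row in counts.values():
        row_total = sum(row.values())
        share = row_total / total  # approximate stationary probability of this AOI
        for n in row.values():
            p = n / row_total  # transition probability from this AOI
            entropy -= share * p * math.log2(p)
    return entropy


if __name__ == "__main__":
    # Synthetic 100 Hz recording: 30 samples at x = 0 deg, then 30 samples at x = 10 deg.
    samples = [(i * 0.01, 0.0 if i < 30 else 10.0, 0.0) for i in range(60)]
    print(detect_fixations(samples))  # two fixations
    print(transition_entropy(["A", "B", "A", "A"]))  # about 0.667 bits
```

A strictly alternating sequence such as A, B, A, B yields zero entropy (fully predictable transitions), while more varied switching between AOIs raises it.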

Statistical Analysis of Eye Tracking Data

The course will focus on the analysis of data from eye tracking experiments in R, a programming language for statistical computing. We will cover topics ranging from data frame management and formatting, through analysis of the distribution of visual attention (based on Areas of Interest, AOIs), to the dynamics of the visual attention process (e.g., discerning ambient and focal attention). Fundamental eye tracking metrics will be analysed with parametric statistical tests, e.g., t-tests or ANOVA (for within-subjects, between-subjects and mixed designs), and linear regression (with moderation and mediation analysis). Participants will receive ready-to-use R scripts for the most common statistical analyses of eye tracking data, as well as scripts for preparing publication-ready visualizations of statistically significant effects. No prior knowledge of programming in R is required for this workshop.
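For discerning ambient and focal attention, one published metric is coefficient K, listed among Krzysztof Krejtz's metrics in his bio. As we understand it, K for fixation i compares the standardized fixation duration with the standardized amplitude of the following saccade: K_i = (d_i - μ_d)/σ_d - (a_{i+1} - μ_a)/σ_a, where positive values suggest focal and negative values ambient viewing. The course itself uses R; the Python sketch below is hypothetical, assumes that durations and following-saccade amplitudes are already paired, and uses the sample standard deviation. Means and standard deviations are usually taken over a larger set (e.g., the whole dataset) and K is then averaged within time windows or conditions; averaged over the very fixations used for standardization, it is zero by construction.

```python
from statistics import mean, stdev


def coefficient_k(durations, amplitudes):
    """Per-fixation coefficient K (hypothetical helper name).

    durations[i]: duration of fixation i.
    amplitudes[i]: amplitude of the saccade that follows fixation i.
    Both sequences need at least two values and non-zero spread.
    """
    if len(durations) != len(amplitudes):
        raise ValueError("each fixation needs the amplitude of its following saccade")
    mu_d, sd_d = mean(durations), stdev(durations)
    mu_a, sd_a = mean(amplitudes), stdev(amplitudes)
    # z-scored fixation duration minus z-scored amplitude of the next saccade
    return [(d - mu_d) / sd_d - (a - mu_a) / sd_a for d, a in zip(durations, amplitudes)]


if __name__ == "__main__":
    # Short fixation + long saccade (ambient) ... long fixation + short saccade (focal).
    k = coefficient_k([100, 200, 300], [3.0, 2.0, 1.0])
    print(k)  # [-2.0, 0.0, 2.0]
```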

Using Linear Mixed Models to Analyse Subtitle Reading

Using eye-tracking methods to research how viewers read subtitles often warrants controlling for many confounding variables. However, it may be impossible to control for all of them, even assuming they are known to researchers. More traditional statistical methods such as t-tests exacerbate the problem: to run such analyses, the data need to be aggregated so that each participant has one data point per dependent variable. In doing so, variance within the data is lost; for example, different subtitle characteristics may affect results differently, and t-tests cannot account for this. One solution is to use linear mixed models (LMMs). In this workshop, using SPSS, we compare two analyses of the same dataset: one using a t-test, the other using LMMs. In the process, we introduce essential theoretical aspects of LMMs and highlight some of their advantages over traditional statistical methods.
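The aggregation step that a t-test requires can be made concrete with a small, hypothetical sketch: trial-level records (participant, subtitle, condition, dwell time) are collapsed to one mean per participant and condition, after which the subtitle identifier, and any variance between subtitles, plays no further role. The record layout, the "fast"/"slow" condition labels, the function names and the paired design are all assumptions. The workshop itself uses SPSS; an LMM would instead be fitted to the trial-level rows, for example with random intercepts for participants and subtitles.

```python
import math
from collections import defaultdict
from statistics import mean, stdev


def aggregate(trials):
    """Collapse trial-level rows to one mean per (participant, condition).

    trials: iterable of (participant, subtitle_id, condition, dwell_ms) tuples
    (a hypothetical layout). subtitle_id is discarded here, which is exactly
    the subtitle-level variance a t-test cannot see.
    """
    cells = defaultdict(list)
    for participant, _subtitle_id, condition, dwell_ms in trials:
        cells[(participant, condition)].append(dwell_ms)
    return {key: mean(values) for key, values in cells.items()}


def paired_t(means, cond_a, cond_b):
    """Paired-samples t statistic on the per-participant means (df = n - 1)."""
    participants = sorted({participant for participant, _ in means})
    diffs = [means[(p, cond_a)] - means[(p, cond_b)] for p in participants]
    return mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))


if __name__ == "__main__":
    # Hypothetical dwell times (ms) on two subtitles per condition.
    trials = [
        ("P1", "s1", "fast", 200), ("P1", "s2", "fast", 220),
        ("P1", "s1", "slow", 250), ("P1", "s2", "slow", 270),
        ("P2", "s1", "fast", 300), ("P2", "s2", "fast", 320),
        ("P2", "s1", "slow", 340), ("P2", "s2", "slow", 380),
        ("P3", "s1", "fast", 100), ("P3", "s2", "fast", 100),
        ("P3", "s1", "slow", 140), ("P3", "s2", "slow", 180),
    ]
    means = aggregate(trials)  # 12 rows become 6 cell means
    print(paired_t(means, "fast", "slow"))  # about -16.0, with df = 2
```

Note that P3's two "slow" subtitles differ by 40 ms, yet only their mean (160 ms) reaches the t-test.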

Tourism Accessibility 4.0 - A Transition of e-Accessibility in Tourism Towards a More Inclusive Future

Tourists are diverse consumers, including a large body of people with disabilities who face physical, sensory, cognitive and cultural barriers in service provision and in many tourism settings, across all travel phases. At the same time, tourism, as a technology-dependent industry, relies heavily on information technology in service delivery, which could further hamper the co-creation of tourist experiences for people with disabilities. However, these barriers could be mitigated, or even turned into opportunities, with recent advances in Tourism 4.0 technologies, such as the Internet of Things (IoT), Big Data Analytics, Artificial Intelligence (AI), Blockchain, Location-based Services, and Virtual and Augmented Reality Systems. This paper therefore identifies gaps in current e-accessibility practices in the tourism industry, which are usually narrow and task-oriented, and identifies new possibilities brought by Tourism 4.0 technologies, which focus on interoperability, virtualization, decentralization, real-time data gathering and analysis capability, service orientation, and modularity. We discuss further research directions and questions for researchers that will allow the creation of more inclusive tourism, able to provide more meaningful and stimulating experiences accessible to all types of consumers.

Qualitative Research Methods in Media Accessibility: Focus Groups and Interviews

Focus groups and interviews are two research methods often used to gather data in user-centric media accessibility research projects. Focus groups and interviews allow researchers to gather the subjective points of view of participants and are often used to complement quantitative data. This session will take a very practical approach: the main features of focus groups and interviews will be presented, and specific advice on how to plan, develop and analyse data gathered through these qualitative methods will be given. Examples from funded H2020 projects will be presented to illustrate how these methods have been used in media accessibility research.

Using Translation Process Methods in Audiovisual Translation and Media Accessibility Research

Different AVT (e.g., subtitling, dubbing, voice-over) and MA (e.g., audio description, subtitling for the deaf and hard of hearing) modes may be defined both as product and as process. While there is a growing body of research into AVT&MA products, the process is still unexplored. Translation Process Research (TPR) essentially “seeks to answer one basic question: by what observable and presumed mental processes do translators arrive at their translations?” (Jakobsen, 2017, p. 21). To answer this question, TPR uses a wide range of research methods drawing on psychology, corpus linguistics, psycholinguistics, anthropology, neuroscience and writing research. In this session we will present the different methods used in TPR (e.g., think-aloud protocols, screen recording, keylogging, heart rate variability) and discuss how they can be applied to AVT&MA.

Reading and writing eye-tracking research papers

Eye-tracking research requires solid methodological preparation and a thorough literature review. Before designing your own study, you should look at the way eye-tracking experiments are conducted and reported. You should also make sure you understand the gist of a given paper and can find the necessary information by looking in the right places. Apart from comprehension, you should also learn how to turn your ideas into a proper research proposal. Methodological preparation, theoretical underpinnings, hypotheses and expected results should be well thought out and written down before you conduct your study.

During this workshop, we will look at the structure of research papers reporting eye-tracking experiments and identify the main points. We will focus on the analysis of the abstract, method and results, and on using this knowledge to plan and report a future study.

Introduction to Eye-Tracking and Biometric Experiments: Equipment Setup, Recording, and Analysis

Research applications that involve eye tracking require, at the most basic level, determining both where a subject is looking and what they are looking at. Application-specific analysis can take place after these two steps. This talk will cover best practices for setting up eye-tracking equipment, including equipment positioning, external environmental factors, participant variability, eye feature tracking and calibration. Once a point of gaze is determined, the gaze position will be linked to the content viewed, including static (text, images) and dynamic (videos, software) screen content, web content and mobile device screens. Finally, techniques for the initial processing of eye-tracking data will be covered, including fixation maps, heatmaps, AOIs and raw data export. Along with the focus on eye tracking, complementary biometric signals such as pupil size, heart rate and galvanic skin response will be discussed.